Networks

It is essential that my dev environment has access to production. It is how code gets pushed into production. So I have a site-to-site VPN into my AWS infrastructure. One of the nice things about AWS is that they actually have a VPN service, and it uses standard IPSec, so it can connect to just about anything.

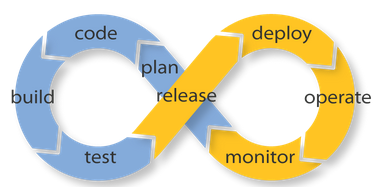

Continuous Delivery with DevOps

Continuous Delivery is not easy to get right, and it’s hard to implement after the fact. Depending on your database, it might not be fully possible at all. It is not in my environment. If I have to roll back a release, I’m going to lose data. Before I do a release, I need to determine how it will affect the database. Nine times out of ten, I have no DB changes. When I do have a change, it is usually just adding a column. Sometimes, however, I do a bit of refactoring, and this becomes a big deal. Typically I’ll have to schedule some downtime. Even though I can’t have my system deploy fully automatically, my system architecture supports it. There are essentially two categories of deployments.

- Deploy onto existing systems and just update your application code.

- Build fresh systems (automatically) with the latest code. Once everything is ready, point the load balancer to the new set of systems.

Option 2 is the best thing to do. It makes your system very agile: it can be deployed just about anywhere, and upgrading systems becomes painless. You are never worried about rebooting a machine to do some sort of system update, because you are effectively always rebooting.
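To make Option 2 a bit more concrete, here is a minimal sketch of the switch-over step in Python. Everything in it is hypothetical: `LoadBalancer` is an in-memory stand-in rather than a real load balancer API, and the server names and the health check are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoadBalancer:
    """Hypothetical stand-in for a real load balancer; it only remembers the active pool."""
    active_pool: List[str] = field(default_factory=list)

    def point_to(self, pool: List[str]) -> None:
        self.active_pool = list(pool)


def deploy_fresh_pool(
    lb: LoadBalancer,
    new_pool: List[str],
    is_healthy: Callable[[str], bool],
) -> bool:
    """Switch traffic to new_pool only if every server in it passes its health check.

    Returns True when the switch happened, False when the old pool was kept.
    """
    if not new_pool or not all(is_healthy(server) for server in new_pool):
        return False  # leave the existing servers in service
    lb.point_to(new_pool)
    return True


# Hypothetical server names; in practice these would come from your provisioning step.
lb = LoadBalancer(active_pool=["app-v1-a", "app-v1-b"])
ready = {"app-v2-a", "app-v2-b"}  # pretend these servers finished booting
switched = deploy_fresh_pool(lb, ["app-v2-a", "app-v2-b"], lambda s: s in ready)
print(switched, lb.active_pool)
```

The point of the sketch is the ordering: the old servers keep taking traffic until the whole new set is ready, so a failed build never reaches your users.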

It does require a different way of thinking. The VM needs to be treated as something volatile, because nothing about it will be staying very long. Think of it as disposable. The first issue will be logs: you need a way to offload the logging. The second issue will be file handling: if you are keeping an archive of files on the VM, these will disappear with the next release.

Centralized Logging

In the past I ran into a nice centralized logging system named Splunk. Splunk is great. It can aggregate logs into a central source and gives you a nice web-based UI to search through your logs. The only issue for me is that I’m on a budget, and the free version is quite limited in the amount of logs it can handle. That is where the ELK stack comes into play. ELK stands for Elasticsearch, Logstash, and Kibana. These are three open source applications that, when combined, create a great centralized logging solution.

I like running a log forwarder agent on the app server and gathering not only my application logs, but the system-level logs as well.
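A forwarder has an easier time when the application writes one structured event per line. Here is a small Python sketch of that idea using the standard `logging` module. The `JsonLineFormatter` class, the `build_logger` helper, and the field names are my own choices for illustration, not something the ELK stack requires, and the in-memory buffer simply stands in for the log file a forwarder would pick up.

```python
import io
import json
import logging
import socket


class JsonLineFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record),
            "host": socket.gethostname(),  # lets you tell disposable servers apart
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def build_logger(name, stream):
    """Hypothetical helper: a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonLineFormatter())
    logger.addHandler(handler)
    return logger


buffer = io.StringIO()  # stands in for the log file the forwarder would tail
log = build_logger("batch", buffer)
log.info("processed %d files", 3)

event = json.loads(buffer.getvalue())
print(event["level"], event["message"])
```

Including the host name in every event matters more than usual here, because the server that wrote the log may no longer exist by the time you search for it.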

File Management

My system uses a lot of files. It downloads files from remote sources and processes them. It creates files during batch processing, and users can upload files for processing. I want to be able to archive these files. Obviously, archiving on the box is a horrible idea when the box is disposable. There are a few solutions, though.

File Server

The easiest thing to do is to set up a file server that is static and is never destroyed. Your app servers can easily mount a share on startup. However, there are some concurrency issues. Two processes can’t safely write to the same file at the same time, and there’s really nothing preventing them from doing so.
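One common way to work around this is a lock file that a writer must create before it touches the real file. The Python sketch below is only an illustration under some assumptions: the `exclusive_lock` helper and the file names are hypothetical, a temporary directory stands in for the mounted share, and whether exclusive creation is honored reliably depends on the file system and protocol behind your share, so test it on your own setup. A crashed process would also leave a stale lock behind, which this sketch does not handle.

```python
import os
import tempfile
from contextlib import contextmanager


@contextmanager
def exclusive_lock(path):
    """Hold `<path>.lock` for the duration of the block; fail if someone else holds it."""
    lock_path = path + ".lock"
    # O_CREAT | O_EXCL makes the open fail with FileExistsError if the lock file exists.
    fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    try:
        yield
    finally:
        os.close(fd)
        os.remove(lock_path)


shared_dir = tempfile.mkdtemp()  # stands in for the mounted share
target = os.path.join(shared_dir, "batch-output.csv")

with exclusive_lock(target):
    with open(target, "a") as handle:
        handle.write("row 1\n")
    # A second writer arriving while the lock is held gets turned away.
    try:
        with exclusive_lock(target):
            second_writer_blocked = False
    except FileExistsError:
        second_writer_blocked = True

print(second_writer_blocked)
```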

Amazon S3

Along the same lines as a file server, S3 can be mounted, and there are also APIs that can be used to access “Objects”.

Content Repository

If you like the idea of API access instead of file system access, but would feel better if your files were still in an easily accessed (and backed up) system, then a Content Repository is probably for you.

System Configuration Management

This is the part that confuses people about DevOps, and why I get so many recruiters who say they want DevOps when they really want a System Administrator. Configuration Management in this context is also known as “Infrastructure as Code.” The theory is that instead of manually configuring a system, you write a script that commands a CM tool to do it for you. This makes the process easily repeatable. There are three main tools in this space (sorry if I don’t mention your favorite): Puppet, Chef, and the new kid, Ansible. If you are going to learn only one, I would pick Chef, mainly because AWS can deploy systems configured via Chef through their free OpsWorks tool. The configurations that you create are just files that can then be checked into source control and versioned.
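What makes this kind of script “easily repeatable” is that it describes the state you want and only changes the system when that state is missing. The Python sketch below illustrates that idea in miniature. It is not Chef, Puppet, or Ansible syntax; the `ensure_line` helper, the file name, and the setting are all hypothetical.

```python
import os
import tempfile


def ensure_line(path, line):
    """Make sure `line` appears in the file at `path`; return True if the file changed."""
    existing = []
    if os.path.exists(path):
        with open(path) as handle:
            existing = handle.read().splitlines()
    if line in existing:
        return False  # already in the desired state, so do nothing
    with open(path, "a") as handle:
        handle.write(line + "\n")
    return True


# Hypothetical config file and setting, created in a temp directory for the demo.
config_path = os.path.join(tempfile.mkdtemp(), "app.conf")
first_run = ensure_line(config_path, "max_connections=100")
second_run = ensure_line(config_path, "max_connections=100")
print(first_run, second_run)
```

Running the same step twice leaves the file unchanged the second time, which is what lets you apply the same configuration to a brand new machine or an existing one without worrying about which it is.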

With a CM tool you can create identically configured machines. This makes it simple to have one set of machines running one version of your code behind a load balancer while a second set is getting ready to take over. That completes your continuous delivery pipeline: just have your deployment script reach out to the load balancer and have it start routing traffic to the new set of servers.

But wait, there’s more

Ideally you will have your CM tool creating virtual machines basically on the fly. But VMs are so 2014. Today we have containers. One of the first things you will notice when creating a machine from nothing with a tool like Chef or Puppet is how long it takes from start to finish before it is actually ready to take web hits for your app. A lot of that time is spent creating the VM in the hypervisor, allocating disk, installing the operating system, doing updates, installing software, and the list goes on. A container is a lightweight, pre-built runtime environment containing just enough software to serve a single purpose. It runs as a process on the host system, making it very efficient, especially in terms of memory usage. We’ve kind of gone full circle. We used to jam everything onto a single server. Then we split everything into separate virtual machines. Now we can bring everything back onto a single server, but each container is isolated and managed as a single unit.

Containers are very cost effective, because your host system can be, and should be, a virtual machine. Instead of paying for 10 small virtual machines, you could get by with 1 or 2 large ones. It would depend on memory and CPU usage.
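As a rough back-of-the-envelope check, you can estimate how many large hosts you would need from total memory and CPU demand. The Python sketch below does that. The `hosts_needed` function and all of the numbers are made up for illustration, and it ignores host overhead and the fact that containers can’t always be packed perfectly, so treat the result as a lower bound.

```python
import math


def hosts_needed(containers, mem_per_container_gb, cpu_per_container, host_mem_gb, host_cpus):
    """Estimate host count as whichever resource, memory or CPU, runs out first."""
    by_memory = math.ceil(containers * mem_per_container_gb / host_mem_gb)
    by_cpu = math.ceil(containers * cpu_per_container / host_cpus)
    return max(by_memory, by_cpu, 1)


# Hypothetical workload: 10 containers, each using 1.5 GB of memory and half a CPU,
# placed on hosts with 16 GB of memory and 4 CPUs.
print(hosts_needed(10, 1.5, 0.5, 16, 4))
```

With these example numbers, memory alone would fit on one host, but CPU demand pushes the estimate to two.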

Conclusion

Hopefully by now you have some insight into retrofitting a legacy project with a DevOps process. Let’s recap the steps to retrofitting your legacy project.

Step 1. Get the Tools in place

Step 2. Automate Your Build

Step 3. Automate Your Tests

Step 4. Automate Your Deployments

See a pattern here? Automation is key. Fortunately for us, we are software developers. This is what we do. We take manual processes and write software to automate them.

This has been a high-level overview of a specific project of mine, but I believe it’s relevant for many other projects out there. Future articles are going to focus more on the technical side of things and will be more of a set of how-tos.